Surround Vision

9:37 PM - Once your car can find a space in a lot it’s never been in before, Jen-Hsun said, your phone could be used to summon the car again.

Jen-Hsun wraps things up by saying we announced three things today: Tegra X1, the world’s first one-teraflop superchip, which is necessary to build our next announcements; DRIVE CX, to create beautiful digital-cockpit technology; and DRIVE PX, the platform on which self-driving cars will be built.

For more on what we’re up to at CES, visit nvidia.com/CES2015.

9:34 PM - So, as Jim wraps up, he shows how the car can, on its own, find a space in the parking garage traveling 5 miles per hour. This draws a round of hoots and applause from the audience.

9:31 PM - These views get translated into video input, which DRIVE PX uses to recognize the environment around it.

Jim next takes the demo to another level. He shows something called NVIDIA DRIVE PX Auto-Valet. The car navigates its way through the parking garage and sees all the objects around it. This modeling of the 3D environment around the car is an important building block to path finding – that is, the car finding its own way forward.

By putting all these pieces together, Jim shows how all the images being generated enable the car to find its own parking space in the parking garage.

9:26 PM - He introduces Surround Vision.

Jim, a third NVIDIA researcher, shows a simulated 3D version of NVIDIA’s parking garage in Santa Clara, with a bright red Jeep driving around it. The parking garage gets reconfigured so there are different cars in different spots, and then different model cars switching in and out of the same space.

There are four fisheye-camera lenses, one on each of the four sides of the Jeep, whose views stitch perfectly together into a 360-degree bird’s-eye view of the car and what’s around it.

9:23 PM - One last thing, Jen-Hsun says … which will combine everything that’s been talked about thus far.

Road Trip! In Gear with Audi

9:20 PM - Ricky now talks about autonomous driving. In October 2014, the company had the first autonomous car driving at an F1 test track at 240 kilometers an hour. Today there’s a milestone in our development of piloted driving: we’ve started the first long-distance piloted driving tour from Silicon Valley to Las Vegas with five U.S. journalists. They finished the first day and are now in Bakersfield. They should arrive in Vegas tomorrow.

9:17 PM - Ricky talks about the Audi Prologue, a concept car introduced at the LA Auto Show two months ago. It has a massive, yawning virtual cockpit, with a touch display in the center.

“What I need from NVIDIA is computing power, lots of pixels,” he said. Responds Jen-Hsun, “Anyone who needs more pixels is music to my ears.”

9:15 PM - Jen-Hsun now introduces to the stage Ricky Hudi, Audi’s executive VP for electronic development. His company has been a huge partner of NVIDIA, pioneering much of the most important technology in cars today.

Ricky says, “A lot of the things you’ve mentioned already about visual computing and driver assistance systems has a lot of common DNA of our two companies, which we’ve created in the past two years.”

Ricky says that one of Audi’s biggest challenges was to speed up the development cycle of auto computing so that it matches that of the consumer world, especially in infotainment and cluster areas. He talks about how Audi can implement cutting-edge NVIDIA technology within just a year. Now, this year, we’re bringing the Audi smart tablet on the market. And in a year, we’ll bring out products with the K1. “We’re not talking about this in the future.”

Thus, what used to take five years of development now takes just one year, which puts Audi at the same pace as consumer electronics companies.

“This level of speed and flexibility….is really a paradigm shift for the automotive industry,” Ricky said.

Deep Learning Hits the Road

9:08 PM - What comes out of the supercomputer is a trained deep neural network. A pair of X1s in NVIDIA DRIVE PX can classify up to 75 objects at the same time, enabling it to understand the environment around the car. When an unknown object is identified, the data center gets alerted, and it works to figure out what the object is through deep learning. Once it figures this out, it conveys this information across the network to all the cars in a brand’s fleet.
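For readers who think in code, the loop described here (classify in the car, flag what's unknown, learn it in the data center, push the result to the fleet) can be sketched roughly as follows. This is a toy illustration only: `DataCenter`, `Car`, and their methods are hypothetical names, objects are plain strings, and "retraining" is a one-line stand-in for real deep learning. Only the 75-object figure comes from the talk.

```python
from dataclasses import dataclass, field

MAX_OBJECTS_PER_FRAME = 75  # figure quoted in the talk for a pair of X1s


@dataclass
class DataCenter:
    """Hypothetical stand-in for the supercomputer that trains the network."""
    known_labels: set = field(default_factory=lambda: {"car", "pedestrian"})
    pending: list = field(default_factory=list)

    def report_unknown(self, observation: str) -> None:
        # A car alerts the data center about something it could not classify.
        self.pending.append(observation)

    def retrain(self) -> set:
        # Placeholder for deep learning: pretend every pending object is learned.
        self.known_labels.update(self.pending)
        self.pending.clear()
        return set(self.known_labels)


@dataclass
class Car:
    """Hypothetical in-car classifier that holds a copy of the trained labels."""
    known_labels: set

    def classify(self, detections: list, data_center: DataCenter) -> list:
        results = []
        for obj in detections[:MAX_OBJECTS_PER_FRAME]:
            if obj in self.known_labels:
                results.append(obj)
            else:
                results.append("unknown")
                data_center.report_unknown(obj)
        return results

    def update(self, labels: set) -> None:
        # Simulates receiving an updated network across the fleet.
        self.known_labels = set(labels)


dc = DataCenter()
fleet = [Car(set(dc.known_labels)) for _ in range(3)]

first_pass = fleet[0].classify(["car", "scooter"], dc)
new_labels = dc.retrain()
for car in fleet:
    car.update(new_labels)
second_pass = fleet[1].classify(["car", "scooter"], dc)

print(first_pass)   # ['car', 'unknown']
print(second_pass)  # ['car', 'scooter']
```

The point of the sketch is that a different car in the fleet recognizes the object after the update, even though it never saw it itself.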

9:04 PM - Mike now shifts to a daytime street scene filmed in Vegas. The system identifies vehicles by what they are – trucks, SUVs, sedans, vans. These identifiers jump out.

Says Jen-Hsun: if we were to engineer each feature detector, it would take a nearly countless number of engineering tasks. These sub-classifications are made with a deep neural network – which took about 16 hours to be ‘learned’ by a Tegra X1. He now shows how the system can identify a police car flashing its lights in your rear-view mirror.

Now that we can recognize pedestrians, cars, animals, you thus become increasingly situationally aware. Then you can apply algorithms to determine the best course of action for a car to take.

8:58 PM - Another NVIDIA researcher, Mike, shows how deep neural network-based computer vision can recognize and read a speed limit sign, as well as pedestrians. But he says there are more difficult things to recognize, like partially blocked pedestrians. He shows a scene filmed near the San Jose Courthouse – while the video was recorded earlier, the deep neural network is processing information in real time.

Jen-Hsun notes that while there are a bunch of lights, the system can identify lights that are red, green or yellow.

Mike now shifts to a scene shot at night in poor weather on a UK expressway. The system can detect, for one thing, a speed camera. But it can also detect numerous objects at the same time, including congestion up front, and where a break in traffic is occurring.

8:52 PM - Once you train this system and ask it what a car is, it can recognize different types of cars and very quickly becomes highly efficient.

He now shows what appears to be a picture of an intersection in an older suburban neighborhood with one-story homes. It shows how the deep learning system can learn to recognize a pedestrian, even if they’re partly blocked by a car. He also describes how it can detect a car or an ambulance behind you – or a school bus in front of you.

“The context of these objects is highly important,” he said. He now shows how Tegra X1 has learned in recent weeks what a crosswalk sign is, and can ‘see’ it from very far away.

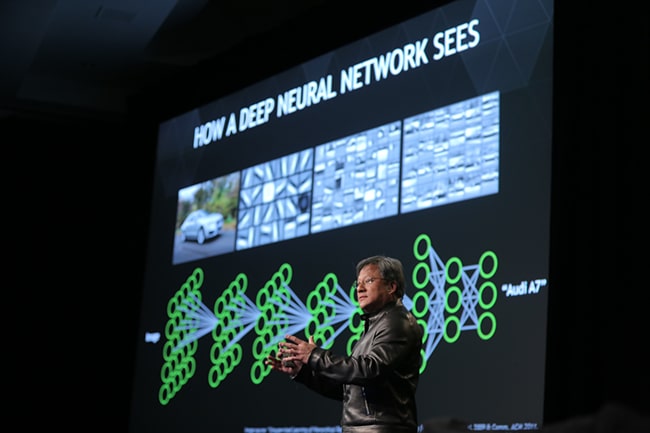

8:48 PM– Jen-Hsun steps back and describes how a deep neural network sees – how it can glimpse a moving silver streak, filter it through its layers of learning and then quickly identify it as an Audi A7 sedan. This process replicates in some fashion the way the brain works, by seeing a blur of black and identifying it after extensive training as a tire. To be able to learn to classify objects can take extensive training.
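To make "filtering through layers" a little more concrete, here is a minimal sketch of a forward pass through a tiny two-layer network in plain Python. Everything in it is an assumption for illustration: the three input features, the two labels, and the hand-picked weights are made up, and nothing here was trained. A real deep neural network has many more layers and learns its weights from data.

```python
import math


def dense(weights, bias, inputs):
    """One fully connected layer: a weighted sum plus bias for each output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]


def relu(values):
    """Keep positive activations and zero out the rest."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical input features: [silver-ness, elongation, roundness].
features = [0.9, 0.8, 0.1]
LABELS = ["sedan", "tire"]

# Hand-picked illustrative weights (NOT learned).
W1 = [[1.0, 1.0, -1.0],
      [-1.0, -1.0, 2.0]]
B1 = [0.0, 0.0]
W2 = [[2.0, -1.0],
      [-1.0, 2.0]]
B2 = [0.0, 0.0]

hidden = relu(dense(W1, B1, features))
probs = softmax(dense(W2, B2, hidden))
label = LABELS[probs.index(max(probs))]

print(label)  # sedan
```

Training is the slow part: it is what adjusts the weights until blurs of silver or black map to the right labels. Once that is done, a forward pass like this one is only a handful of multiplications and additions per layer.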

8:45 PM - With all this data coming in, computer vision technology is critical. We’re announcing Deep Neural Network detection, which can learn to identify different objects. In the past, something like this had to be hard-coded by engineers.

But if you want your car to be situationally aware, it needs to detect more than just a few objects that are hard-wired into it.

The GPU lets researchers around the world use deep learning in a productive way, according to a recent major article in Wired magazine. Other key enabling factors are voice recognition and sophisticated new algorithms. But GPUs have increased the precision of deep learning dramatically. Now, GPU-accelerated deep learning can identify objects better than most humans.

Introducing NVIDIA DRIVE

8:40 PM– The new NVIDIA DRIVE PX can connect 12 cameras – say, 3 in the front with different views; others on the rear and sides. They’d all feed into the DRIVE PX. It can do extremely sophisticated image processing and record the information into a data recorder inside the car – recording dual 4K at 30 hertz.

8:38 PM - With a powerful enough processor, Jen-Hsun says, a car can integrate all the information coming into it and become situationally aware. That’s necessary for self-driving cars, as is the ability to do real-time software updates.

Our vision is that all these inputs come into a mobile supercomputer. We call that NVIDIA DRIVE PX. He holds a board about half the size of a sheet of paper.

On it are two Tegra X1s. This gives 2.3 teraflops of processing power, can connect up to 12 cameras and can process 1.3 billion pixels a second.
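A quick back-of-the-envelope check on those figures, as a rough sketch. It assumes the pixel budget is split evenly across all 12 cameras and that each camera runs at 30 frames per second; the talk states neither, so treat the per-camera numbers as illustrative only.

```python
# Figures quoted on stage for DRIVE PX.
TOTAL_TERAFLOPS = 2.3
CHIP_COUNT = 2
TOTAL_PIXELS_PER_SECOND = 1.3e9
MAX_CAMERAS = 12

# Assumption, not from the talk: every camera runs at 30 frames per second.
ASSUMED_FPS = 30


def per_camera_budget(total_pps: float, cameras: int, fps: int):
    """Split the pixel throughput evenly across cameras (an assumption)."""
    per_camera_pps = total_pps / cameras
    per_frame_pixels = per_camera_pps / fps
    return per_camera_pps, per_frame_pixels


tflops_per_chip = TOTAL_TERAFLOPS / CHIP_COUNT
pps, frame_pixels = per_camera_budget(
    TOTAL_PIXELS_PER_SECOND, MAX_CAMERAS, ASSUMED_FPS
)

print(f"Per chip: {tflops_per_chip:.2f} teraflops")
print(f"Per camera: {pps / 1e6:.1f} megapixels per second")
print(f"Per frame at {ASSUMED_FPS} fps: {frame_pixels / 1e6:.2f} megapixels")
```

Under those assumptions, each camera gets about 108 megapixels per second, or roughly 3.6 megapixels per frame.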

8:36 PM– As cameras get better, we can imagine radar assistance getting replaced by smart cameras, which can warn us of road signs, pedestrians, oncoming cars. But as the sensors become more powerful, they’re also becoming more efficient. Replacing sensors with cameras and unifying the information they receive would let us do something magical. And that’s the road to self-driving cars.

8:34 PM - Justin scampers off the stage, and with him goes the DRIVE CX demo, which includes the hardware, middleware and creative tools, as well as the horsepower of the Tegra X1.

Jen-Hsun shifts gears now to talk about state-of-the-art ADAS – advanced driver assistance systems – which make for safer driving. Aspects include lane-departure warnings, collision-avoidance systems, blind-spot detectors. It’s evolved a lot, enough to even permit parallel-parking assist. It’s made possible by radar (which can go very far at any time, day or night), ultrasound technology (which is low cost and lets you do sensing at close distance) and increasingly vision (which deploys cameras).

Each detector is autonomous or independent. But increasingly it’s all being replaced by camera technology, which is getting better and better.

8:30 PM - Justin now shows what the gauge looks like made of polished aluminum, porcelain and car paint. The switch is instantaneous and allows for shadows and a deep level of subtlety. These are mathematical equations being computed on the Tegra X1 that show how light bounces off each individual material.

8:28 PM - The gauges reflect shadows from the needle, but they can be re-created with different materials. They can be shown in brushed aluminum or brushed copper, for example.

Justin now shows how you can take real physical swatches of material – there are examples in a small on-stage lab of car paint, bamboo, powder coat, aluminum, porcelain, carbon fiber. He shows how you can take the swatch of material, and it becomes the theme of the rendering.

8:25 PM - Justin, who’s a pretty animated fellow, then shows off the cluster. This is an area we see as the jewelry of the car, where we can use all our graphics prowess. This enables rich, 3D gauges that use physically based rendering. The gauges look like carefully wrought, 3D roulette wheels, spinning at an angle, with the subtlety of the glass and metal they’re representing.

8:22 PM - He shows off how you can place information wherever you’d like on the screen. This nav system is fully rendered smoothly in 3D, with the space around the car lit dynamically, which involves ambient occlusion, making it look like an experience out of Tron.

8:21 PM - Jen-Hsun now shows the NVIDIA DRIVE Studio. It’s an infotainment system and virtual cockpit combined into one computer. Any OS is supported.

He now introduces Justin, from the company’s DRIVE Studio program. Justin describes DRIVE Studio as a platform for partners to integrate their technology, so they can translate their vision for next-gen car technology into reality.

First, he shows off the infotainment system. It includes media integration, text-to-speech, voice control, navigation… all of these things are included.

8:17 PM - DRIVE CX is powered by X1 and includes software we call the DRIVE Studio. This gives designers access to this incredible processing power. DRIVE CX will take computer graphics to a whole new level, Jen-Hsun says. While a car map now looks like a cartoon, that’s going to change fast.

8:15 PM - Current state-of-the-art cars will have around 1 megapixel, but that will grow by the end of the decade to more than 20 megapixels. To meet this demand, NVIDIA is launching today the NVIDIA DRIVE CX, a digital cockpit computer, which can process nearly 17 megapixels, equivalent to two 4K screens.
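The "nearly 17 megapixels" figure is easy to sanity-check. The short sketch below assumes "4K" means the common 3840 x 2160 UHD resolution; the talk doesn't spell out which 4K variant is meant.

```python
# Assumed resolution of one "4K" panel (UHD); the talk only says "4K".
UHD_WIDTH = 3840
UHD_HEIGHT = 2160


def megapixels(width: int, height: int, screens: int = 1) -> float:
    """Total pixel count of `screens` identical panels, in megapixels."""
    return width * height * screens / 1_000_000


two_4k_screens = megapixels(UHD_WIDTH, UHD_HEIGHT, screens=2)
print(f"Two 4K screens: {two_4k_screens:.2f} megapixels")  # 16.59 megapixels
```

That works out to about 16.6 megapixels, which squares with "nearly 17."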


Introducing NVIDIA Tegra X1

8:14 PM - For those who follow these things closely, he said that the X1’s code name was Erista. But the question now is what will it run? The answer is cars – which will have more computing power than anything else we have. Roof pillars can become curved displays that let you see through the car. The back seat can be a display. We believe that the number of screens in your car will grow exponentially.

8:12 PM - If you compare Tegra X1 with an Intel Core i7, X1 uses only a tenth of the power and is in many respects more powerful.

8:11 PM - Tegra X1 has the exact same engine that runs in high-end PCs and a next-gen game console. TX1 is the first mobile chip to provide a teraflop of processing power. That’s equivalent to the fastest supercomputer in the world in 2000. But that system consumed a million watts. Now, we can put that in a tiny chip.

8:09 PM - Because Maxwell is so efficient, it can run things at an unprecedented level. Last year, when next-gen game consoles were released, they consumed 100 watts when running Unreal Engine’s Elemental demo. Now we can do it on X1 at 10 watts. The demo shows a waterfall of dripping fire, with a creature who looks like he consumed a volcano, which is flashing from many orifices.

8:07 PM - JHH is now talking about the super energy efficiency of the Tegra X1. While Tegra K1 set new standards for efficiency, X1 is twice as efficient still, according to the GFXBench 3.0 benchmark.

8:06 PM - TK1’s performance was amazing. Though launched a year ago, it’s still the best performer, even compared with the Apple A8, which was just launched. But Tegra X1 blows it out of the water.

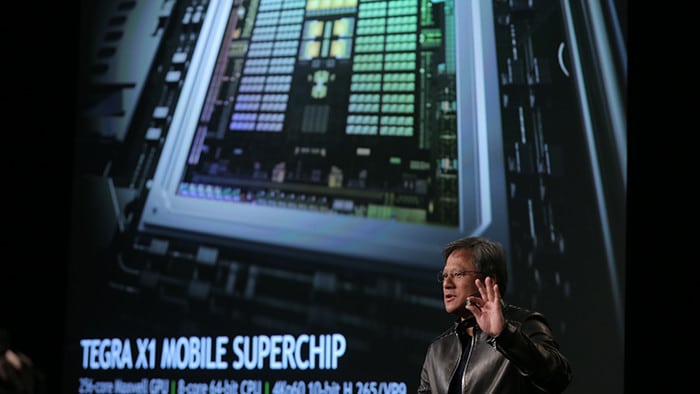

8:04 PM - Yeow, it has 256 GPU cores and an eight-core 64-bit CPU. It can do 10-bit video in H.265/VP9.

8:04 PM - A year ago at CES we announced Tegra K1, JHH says. It was important because it brought to mobile devices the ability to do things that were hard to imagine.

It took two years to take Kepler to TK1, he says. Four months ago, we announced Maxwell-architecture GPUs, with 2X the performance of Kepler. Right now, we’re announcing that this very same Maxwell GPU will come to mobile, as Tegra X1 – a mobile superchip.

8:02 PM - Right on the money, Jen-Hsun takes to the stage. No shocker. He’s wearing his trademark black leather jacket. Lights dim. Crowd quiets down a bit and applauds.

8:00 PM - We’re a few moments away. Jen-Hsun Huang, JHH, as he’s known, is quite punctual, generally, so it shouldn’t be long. The voice of God on the loudspeaker is beckoning latecomers to get seated.

7:57 PM - Pretty cool placeholder slide’s showing in back of the long, narrow stage. It’s the NVIDIA logo against an image of green and black and white brain synapses which seem to be twitching.

7:56 PM - CES doesn’t officially start until Tuesday. But just as Memorial Day’s the unofficial kickoff to the U.S. summer, Black Friday’s the unofficial kickoff to the holiday shopping season, and the swallows returning to Capistrano’s the unofficial start of spring, NVIDIA’s annual Sunday press conference is the unofficial kickoff to CES.

7:55 PM– We’re five minutes out here at the Four Seasons, before Jen-Hsun takes the stage. Room is just about packed, with a couple of open seats but lots standing. A phalanx of cameras in the back. Lots of journos setting up their stories right now.


We can’t wait to get started.

NVIDIA CEO Jen-Hsun Huang opens our official presence at the 2015 International Consumer Electronics Show, in Las Vegas, Sunday at 8 pm Pacific.

We won’t spoil any of the surprises. But stay tuned. We’ll be live blogging throughout the event. Hit refresh on your browser for updates.
