11:12 – JHH thanks Elon and talks about how much work there is to do, and how committed NVIDIA is to doing it. Elon strolls off stage as JHH calls him “the engineer’s engineer.” Things are winding down now.

There’s a recap of the day’s news:

- TITAN X, the world’s fastest GPU;

- the DIGITS DevBox, a deep-learning platform;

- Pascal, with its 10X acceleration beyond Maxwell;

- and NVIDIA DRIVE PX, a deep-learning platform for self-driving cars.

And now, the show begins.

11:10 – JHH asks about protecting cars from security attacks, from hacks, like computer networks have been hacked.

Elon notes that there’s great importance for a car to still have a steering wheel and brake, with additional levels of security. Thus, while an infotainment message might be able to be hacked, there’d be much tighter security that would prevent a car’s engine or self-steering system from being hacked.

Tesla is the leader in electric cars and we’ll be the leader in autonomous cars, Elon says. We’re going to put a lot of care into autonomous driving and we’re going to save a lot of lives.

When it comes to AI, I’m not worried about autonomous cars or autonomous air conditioning units, it’s the deep intelligence, Elon says.

11:06 – “We’ll take autonomous cars for granted in a very short period of time,” Elon says.

JHH asks about government policies, saying he’d love to work on email while driving to work, quickly adding that he’d like to do so without breaking the law. What does the government need to do?

Elon notes that it will be several years after self-driving becomes possible before government will allow it. Regulators want a car to be not just as safe as a person driving, but significantly safer.

“When it comes to public safety, there’s an argument for being quite cautious before there’s a change. I don’t think it’s the case right now that there’s a full autonomous system that regulators aren’t approving. But there could be next year.”

Elon adds that the first thing we try to do is establish a hardware platform so we can do continuous updates to the software. A lot of that will happen later this year. I have an announcement on Thursday, and I don’t want to get ahead of that.

He deflects Jen-Hsun’s kidding effort to ask if he wants to share that news: “There’s going to be a call on Thursday on what’s going to be in our next version for anyone that’s interested, that’s all.”

11:01 – The conversation between NVIDIA CEO Jen-Hsun Huang and Tesla Motors CEO Elon Musk continues…

JHH: What’s your roadmap for getting to self-driving cars?

Elon: First, you can keep uploading new software, so the car you have will steadily improve with new capabilities. The car will get smarter and smarter with the current hardware suite. Even with just what we have, we’ll make huge progress in autonomy. We can make the car steer itself on the freeway and do lane changes. Autonomy is about what level of reliability and safety we want. But with the current hardware suite, it can’t drive safely at 30MPH with children playing. To solve that, we need a bigger sensor suite and more computing power. What NVIDIA is doing with Tegra is really interesting and really important for self-driving in the future.

Asked by JHH, Elon begins discussing technological hurdles. It gets tricky in a 30-40MPH open driving environment. At 5-10MPH it’s relatively easy because you can stop within the range of ultrasonics. And then from 10-50MPH in a complex environment, that’s where you get a lot of unexpected things happening. Over 50MPH in a freeway environment, it gets easier because the possibilities get narrower. So, it’s the midrange that’s challenging. “But we know exactly what to do and we’ll get there in a few years.”

10:56 – We can talk about cars forever, JHH says. Instead, he introduces Elon Musk. He says he’s bought all three of Tesla’s cars – the original sports car and two versions of the Model S sedan.

Elon comes on stage, dressed in black, identical to JHH, though lacking the black leather jacket.

JHH: We made it a point not to rehearse anything. So, let’s get directly to the juicy stuff. You were quoted as saying artificial intelligence is more dangerous than nuclear weapons, like a demon. How do you consolidate and rationalize that with deep learning’s potential?

Elon: We don’t have to worry about autonomous cars. Doing self-driving is easier than people think. There used to be elevator operators, but now we’ve developed circuitry so they go where you want to go. In the distant future, they may outlaw driven cars because they’re too dangerous.

JHH: If we have the right intelligence, we don’t have to make cars that heavy because they don’t need to survive the same collisions. Can we relax some of those laws?

Elon: If you can count on not having an accident, you can get rid of a lot of things, though we’re still some time away from that. Production capacity for cars and trucks is 100M a year and there are 2B on the roads now, so it could take 20 years for the whole base to be transformed. Similarly, it would take 20 years to replace the world’s fleet of cars with electric.
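The 20-year figure is simple division of the fleet size by annual production. A minimal sketch of that arithmetic follows; the constant and function names are illustrative, and the model assumes production stays flat and every new vehicle replaces an old one, which the talk did not spell out.

```python
# Figures quoted on stage; the flat, one-for-one replacement model is an assumption.
ANNUAL_PRODUCTION = 100_000_000   # new cars and trucks built per year
FLEET_SIZE = 2_000_000_000        # vehicles on the road today


def years_to_replace(fleet_size: int, annual_production: int) -> float:
    """Years until cumulative new production equals the existing fleet."""
    return fleet_size / annual_production


years = years_to_replace(FLEET_SIZE, ANNUAL_PRODUCTION)
print(f"Roughly {years:.0f} years to turn over the fleet")
```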

10:50 – JHH shows a DARPA self-driving car initiative called Dave, which was trained on 225K images. Dave was trained using deep learning on these images to work out what driving behavior to produce.

He sums it up nicely: “The input was images, the output was drive commands. It’s a deep neural network in action.”

DRIVE PX is based on two NVIDIA Tegra X1 processors, and takes input from 12 cameras.

Instead of Dave’s 3.1 million brain-like connections, AlexNet on DRIVE PX has 630 million connections. While Dave processes at 12 frames a second, DRIVE PX processes AlexNet at 184 frames a second. While Dave’s connections fire off 38 million times a second, DRIVE PX can fire off its neural capacity at a rate of 116 billion times a second.
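The firing rates quoted here look like connections multiplied by frames per second. That reading is an assumption rather than something stated on stage, but a quick check under it lands close to the quoted numbers: Dave comes out near 37 million firings a second, and 116 billion firings across 630 million connections implies roughly 184 frames a second for DRIVE PX. The function name is illustrative.

```python
def firings_per_second(connections: float, frames_per_second: float) -> float:
    """Assumed model: every connection fires once per processed frame."""
    return connections * frames_per_second


# Dave: 3.1 million connections at 12 frames a second.
dave_rate = firings_per_second(3.1e6, 12)

# DRIVE PX: back out the frame rate implied by 116 billion firings a second
# across AlexNet's 630 million connections.
implied_fps = 116e9 / 630e6

print(f"Dave: {dave_rate / 1e6:.1f} million firings per second")
print(f"DRIVE PX: about {implied_fps:.0f} frames per second implied")
```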

Augmenting today’s ADAS systems with deep learning has enormous potential. This can be done with DRIVE PX, which will be available in May 2015 as a developer kit, for $10,000 – to qualified buyers.

10:44 – JHH now talks about NVIDIA’s DRIVE PX Self-Driving Car Computer, which will bring deep learning to ADAS.

It’s going to be very difficult to code if-then scenarios for an infinite number of possibilities on the way to self-driving cars.

How do you teach a baby to play ping-pong? With conventional analysis, you’d have to teach a baby when a ball leaves a hand, when the paddle should strike the ball, the impact of the sponge on the paddle on the ball. You’d have to teach the baby Newtonian physics. It requires simulating trajectory, among other things.

JHH shows a wonderful video of a dad teaching a one-year-old to play ping-pong. The baby’s sitting on the ping-pong table and hits the ball back with kind of amazing consistency. He does from time to time get distracted.

“It turns out that teaching a car to drive itself isn’t that different from teaching a baby to play ping-pong,” NVIDIA CEO Jen-Hsun Huang says.

10:38 – JHH now shifts gears, so to speak, to the subject of self-driving cars.

ADAS – advanced driver assistance systems – is used for features like adaptive cruise control and self-braking in emergencies.

He asks, “But stopping a car when you get to an object is far off from self-driving cars. How do we get from here to self-driving cars?”

Our vision is to augment today’s ADAS systems with deep learning. Stopping when an object is ahead is available today. We want to augment this and learn behavior over time to get smarter and smarter.

The big bang of self-driving cars is about to come. The availability of massively parallel supercomputers, the availability of training from cameras like GoPro, we’ll be able to train cars with smarter behavior. This is going to require more than if-then training. We can’t program every possibility. We’ll never get there. Deep learning is what will get us there.

10:34 – To get a closer description of this 10X speedup, you might want to check out our latest blog post, which goes into some detail on how this is derived. There will be a deeper drill-down later as well, available on our Parallel for All technical blog.

10:31 – We’re shifting gears again and going to the promised Roadmap Reveal.

JHH promises that this will address the concern of how much faster can we go.

He cites Pascal, the next generation of GPUs following the current gen, Maxwell, which launched just a year ago. Pascal was initially mentioned at last year’s GTC but without much detail. Now the details are being provided.

Pascal has three great features. One is mixed precision, which it provides at 3X the level of Maxwell. Another is 3D memory, which provides more bandwidth and capacity simultaneously. A third is NVLink, which allows multiple GPUs to be connected at very high speeds. It also has 2.7X more capacity than Maxwell.

As a result, Pascal will be 10X faster than Maxwell, although JHH mentions that this is just a very rough, high-level estimate.

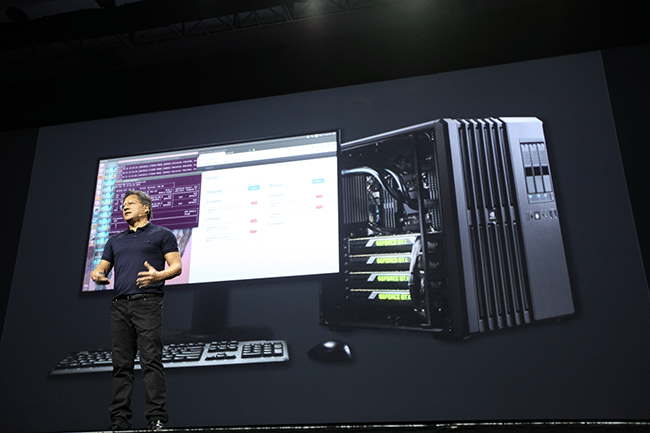

10:24 – JHH rolls out a software offering by NVIDIA called DIGITS, which enables deep learning to be carried out. It can process data, configure a deep neural network, monitor progress along the way and visualize layers.

To package this stuff up, he says that NVIDIA has built a single appliance called the DIGITS DevBox, which plugs into the wall. It’s the maximum GPU performance from a wall socket. It’s packed with TITAN X GPUs, boots up Linux and software that runs deep learning.

Early results have been fantastic, he notes. There are rave testimonials from execs at Flickr and Facebook. DIGITS DevBox isn’t intended to be sold widely – it’s for researchers, not the mass market. “Researchers can now very quickly get fully up to speed, so you can get back to work and not worry about being a plumber or electrician,” JHH says. Starting price is $15,000, available in May 2015.

“It’s priced at a level all researchers can afford.”

10:19 – JHH now talks about some amazing work that’s being done in what’s called “Automated Image Captioning with ConvNets and Recurrent Nets” by researchers at Stanford University.

He introduces Andrej Karpathy, a Stanford researcher behind this work, which involves the network “seeing” images and describing them in a simple sentence. The science behind this is unfathomably complicated, but the end result is easy to understand.

He flashes pictures on a screen and shows how the network identifies them based on 500,000 images.

He shows an image of a bird on a branch of a tree, which the network identifies as, well, “a bird perched on a branch of a tree.” This may lose something in the writing.

- A picture of an Amish horse and buggy is identified as “a man riding a horse drawn carriage down a street.”

- An image of an Amsterdam canal is identified as “a boat is docked in a canal with a large building in the background.”

- An image of a flying fish over the sea is identified as “a small white bird flying over a body of water.” It’s stumped the network.

- And now a toddler holding a toothbrush, identified as “a young boy is holding a baseball bat.”

- There’s another try that misidentifies Arnold Schwarzenegger and the former President George H. W. Bush going down a snowy hill on a toboggan, calling them a man and child on a park bench.

Well, JHH did say we’re just at the beginning of this.

10:11 – Mike shows how a computer network is trained by pumping thousands, even millions of images through it. The network gets smarter and smarter until it’s able to identify pictures.

After some pretty deep technical description, Mike finishes up. “This is a sense of what a deep neural network is about,” JHH says. There’s lots of mathematics with iteration upon reiteration.

Anybody who’s a data scientist, anyone who’s trying to predict the future based on non-computationally-extracted patterns – anyone doing this is jumping into deep learning. The number of companies doing this has exploded. The list is massive. There’s Facebook, IBM, Baidu and Google, among the biggest. And there is a sea of startups engaging in this. As a result, applications will seem smarter than ever.

Deep learning is also sweeping through science, he notes. It’s involved in detecting mitosis in breast cancer cells, work being done by Switzerland’s IDSIA. It’s used to predict the toxicity of new drugs by Johannes Kepler University in Austria, and to understand gene mutation to prevent disease by researchers at the University of Toronto. “This is all groundbreaking work, and it’s just the beginning,” JHH says.

10:02 – JHH now shows the way a deep neural network works, depicting a multitude of filters that work the way the brain does. Each layer, each filter identifies many different aspects of an image, which ultimately gets recognized by the computer.

There’s a bit of a kerfuffle with the slides that he’s showing, and it turns out he’s a slide ahead of himself. He asks the tech team to back up a slide and then falls back into stride. “One of the best things about GTC keynotes is we don’t rehearse very much,” he says, laughing.

JHH now introduces a colleague, Mike Houston, a PhD researcher at NVIDIA, who gives a description of how a finely tuned version of AlexNet works. Take a picture of a cute little bunny, which gets read by 96 different filters. Dominant features get identified and then put through weight networks. There are some pretty stunning graphics that make it feel like you’re flying through a computer network.

Take a picture of a koala or a housecat, Mike says. You can put it through the network and see how they’re identified not as a squirrel, not as a stuffed animal, but as what they are.

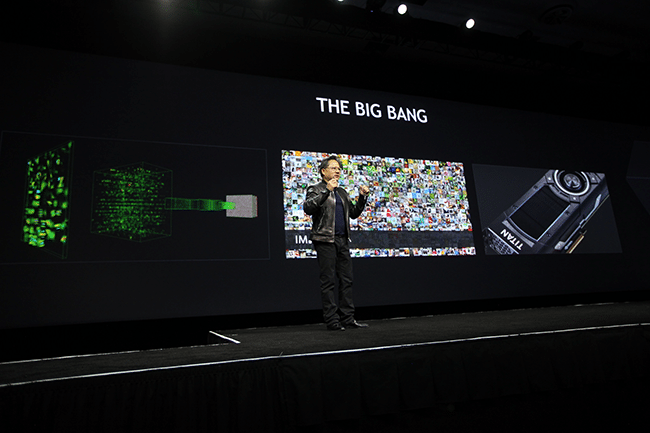

9:54 – In a more recent image-recognition challenge, called ImageNet, the error rate achieved using NVIDIA GPUs in a deep network has fallen from 5.98 percent by Baidu, to 4.94 percent by Microsoft, to 4.82 percent by Google. Any of these is a lower error rate than a human could produce.

There are three reasons for this. Call it the big bang of deep learning, which occurred in 2012. First is the rise of algorithms in deep neural networks, developed by some of the finest minds in computing. Second is the rise of huge data sets, which has been possible only with the rise of the Internet, which produces sets with millions of constituents. Third is the rise of GPU-powered supercomputers, which are able to supply the processing grunt.

9:49 – Now, JHH is on to his second announcement that he promises will be about “a very fast box.”

But first, he provides background about the history of deep learning.

Although its origins go back 50-60 years, deep learning started in earnest in 1995 at Bell Labs with work by Yann LeCun. He did the initial work in the field, recognizing edges using hand-engineered codes built up over those previous decades. Work done in 1998 developed a ground-breaking method for handwriting and number recognition, which has replaced humans at banks and other organizations.

More recently, the basis for deep learning moved from computer vision to deep neural networks, and accuracy began improving markedly, thanks to work by Geoff Hinton, Alex Krizhevsky and others in developing AlexNet. In international competition, AlexNet raised accuracy from a previous record of 74 percent in 2011 to 84 percent in 2012. Now, it is pushing towards 90 percent and beyond.

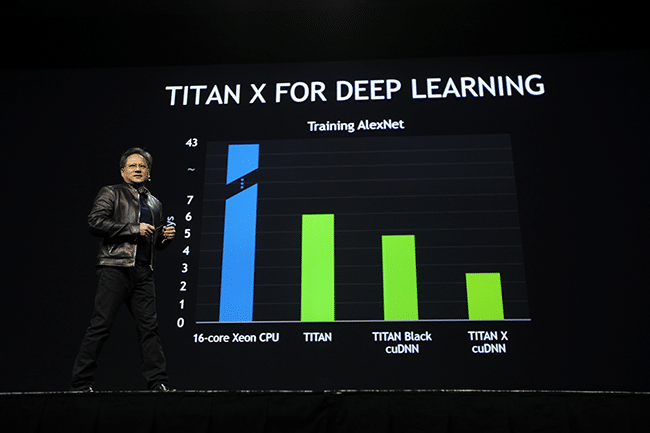

9:40 – But, JHH notes, TITAN X is about more than graphics. It’s also about deep learning.

TITAN X can train AlexNet in under three days, compared with 43 days for a 16-core Xeon CPU, and six days on NVIDIA’s original TITAN GPU from a year and a half ago.
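To put those training times side by side, here is a small sketch of the implied speedups. TITAN X is quoted only as “under three days,” so the sketch treats three days as an upper bound, which makes the resulting factors lower bounds. The dictionary and variable names are illustrative.

```python
# AlexNet training times in days, as quoted in the keynote.
training_days = {
    "16-core Xeon CPU": 43,
    "original TITAN": 6,
}
TITAN_X_DAYS = 3  # quoted as "under three days", so treated as an upper bound

# Speedup of TITAN X over each baseline (a lower bound, given the above).
speedups = {name: days / TITAN_X_DAYS for name, days in training_days.items()}

for name, factor in speedups.items():
    print(f"TITAN X vs {name}: at least {factor:.1f}x faster")
```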

JHH says, “When you speed something up from a month and a half and you reduce it to a week, it’s the difference between your willingness to do the work and maybe not at all. Down to three days is utterly life changing.”

JHH announces its price: $999. “It will pay for itself in just one afternoon.”

9:37 – Man, it’s an incredibly beautiful animation that looks close to being filmed, but with far more detail than a filmed version could capture. A young boy’s runaway red kite soars through a mountainous valley to the edge of a cave.

The boy recovers the kite at the cave’s edge and then enters it. In an opening, he lets the kite go and it joins dozens of others, swirling in the sky. The tagline comes up, “If you love something, set it free.” Hearty applause comes up.

“That story was impossible to tell without the advancement of computer graphics. When you see it, it takes your breath away,” JHH says.

9:34 – He talks about the importance of acceleration.

“Most of the applications we serve are about speed. Without speed, you can’t do your work. We need to find a delicate balance between programmability and speed.”

He says that by putting CUDA in every single GPU, we make it as easy as possible for you to develop and apply your software. I’d like to show you today how we’re living up to this promise.

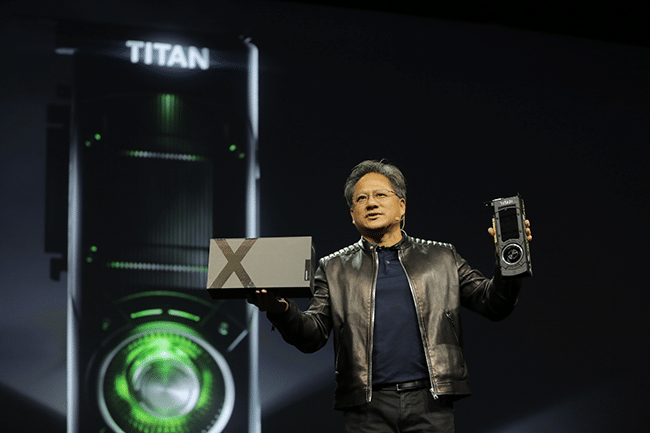

So, he introduces NVIDIA’s latest GPU, TITAN X, with a very elegant, highly animated video that’s part Blade Runner, part film noir. TITAN X was first mentioned two weeks ago at the Game Developers Conference, with few details.

Now, he’s specific. It has 8 billion transistors, 3,072 CUDA cores, 7 teraflops of single-precision throughput, and a 12GB framebuffer. It’s based on Maxwell.

He shows what it can do with a real-time animation called Red Kite, by Epic Games. It’s running on TITAN X. It captures 100 square miles of 3D graphics, depicting a yawning valley with 13 million plants. Any given frame is 20-30 million polygons. It shows physically based rendering – rocks look like rocks, shadows like shadows.

“This is the most beautiful rendering I’ve ever seen.”

9:27 – Now, JHH talks about breakthroughs in GPU computing, thanks to the kind of people who attend GTC.

GTC’s first year was 2009. The prior year, there were 150,000 downloads of CUDA, NVIDIA’s language that allows GPUs to crunch data. There were 27 CUDA apps, 60 universities teaching CUDA, 4,000 academic papers on CUDA, and 6,000 Tesla GPUs.

By contrast, last year there were 3 million CUDA downloads, 319 CUDA apps, 800 universities teaching CUDA, 60,000 papers citing GPUs and CUDA, and 450,000 Tesla GPUs in use.
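For a sense of scale, the growth multiples behind that chart can be worked out directly from the two sets of figures. This is only a rough sketch: the category labels are illustrative, and the two paper counts are described slightly differently on stage, so that ratio in particular is approximate.

```python
# Figures for the year before the first GTC, as quoted in the keynote.
before = {
    "CUDA downloads": 150_000,
    "CUDA apps": 27,
    "universities teaching CUDA": 60,
    "academic papers": 4_000,
    "Tesla GPUs": 6_000,
}

# Figures quoted for last year.
after = {
    "CUDA downloads": 3_000_000,
    "CUDA apps": 319,
    "universities teaching CUDA": 800,
    "academic papers": 60_000,
    "Tesla GPUs": 450_000,
}

# Growth multiple for each category.
growth = {name: after[name] / before[name] for name in before}

for name, factor in growth.items():
    print(f"{name}: {factor:.1f}x")
```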

It’s a pretty impressive chart that he shows. “It’s amazing progress in just a few years, and I want to thank all of you for that.”

9:23 – JHH recaps the past year in visual computing – highlighted by breakthroughs in gaming, including game-streaming to the television. Think of it as a Netflix for gaming.

He also cites breakthroughs in cars, which he says are increasingly software and hardware on wheels. The type of experiences we’ll see in the near future are inspiring. Knobs, buttons and dials are being replaced with touch, nodding and gestures. Cars will be just one delightful experience rolling down the street.

He also describes breakthroughs in enterprise – with GRID-accelerated VDI, virtual desktop infrastructure, that allows graphics-intensive applications to stream to your connected screen, no matter where you are. NVIDIA technology is being used to make the new “Star Wars” movie. “I’m out-of-my-body excited about this. I’ll create any technology I can to allow them to make ‘Star Wars’ faster,” he says to laughter.

And he describes breakthroughs in deep learning – how for the first time recently a computer was able to beat humans at recognizing images.

It’s been an amazing year in visual computing.

9:17 – There’s a rousing cheer. JHH comes out in his trademark black — leather jacket, black pants and shirt.

He says he’s going to cover four things: a new GPU and deep learning; a very fast box and deep learning; a roadmap reveal and deep learning; and self-driving cars and deep learning. Is there a pattern here?

Deep learning is arguably as exciting as the invention of the internet, he says. The potential of this technology and this work, and its implications for all industries and science, are amazing. The possibilities are endless.

He says he will dedicate his entire talk to deep learning, as much of this show will be dedicated, as much of the work of the next decade will be.

9:12 – The room grows black, and the video comes up. NVIDIA puts massive time – and resources – into creating so-called anthem videos for its signature shows, put together by a richly talented in-house creative studio.

This one’s built on the theme “Do you remember the Future,” a reference to the thousands of researchers in the audience doing work in fields ranging from nano-particles to astronomy, cancer detection to materials-science.

Against a bouncy, slightly nostalgia-tinged soundtrack, it presents a flowing collage of videos with a voiceover by the mellifluous Peter Coyote. The vids depict the way the future has been seen in the past – the way adults today remember it being seen in our youth – compared with how technology is being applied today.

There’s the 1960s smile-inducing Jetsons, of course, in flying cars and grainy footage, and the flying DeLorean from the 1980s-era Back to the Future – contrasted with self-driving capabilities being developed by Audi and Tesla. There’s a scene from 1966’s Fantastic Voyage of a crew navigating through the human body – contrasted with an animation of a video-equipped pill being developed by the University of Barcelona that sends images as it wends its way through the digestive tract. There’s a scene of the Star Trek tricorder medical scanning device – contrasted against work being done today with machine learning-based efforts to detect malignancies in cancer diagnostics.

The narrator intones at the end, “Do you remember the future? Look around you. It’s here.”

It’s enough to make an aging Trekkie well up a bit.

9:11 – A hopeful sign. The so-called Voice of God comes up and asks people to be seated. The keynote, she says, will get started momentarily. The music is starting to shift gears, another promising sign.

8:55 – Folks are still streaming in, packing this airplane-hangar-sized space. Well, it’s not quite like an elevator car in the morning rush, but there aren’t empty seats. Jen-Hsun – we’ll refer to him as JHH in this live blog – usually comes on within a minute or two of the official start. So, it shouldn’t be long.

8:50 – We’re back in the San Jose Convention Center for the sixth annual GPU Technology Conference.

It’s a big one – 4,000 attendees from 50 countries. There will be 500 sessions this week on how GPUs are pushing research forward on a jillion fronts. And there will be three high-profile keynotes.

But the highlight of GTC each year is invariably Jen-Hsun Huang’s keynote. He’s a great showman with exacting standards and a taste for wildly ambitious demos. Plus, he’s being joined today by Elon Musk, the man behind Tesla Motors and SpaceX.

The room is largely black, with a few poison-green illuminated GPU Technology Conference signs. The stage itself stretches across the back wall of the massive room. It’s 120×20 feet, the size of a third of a tennis court. Against it is a moving graphic of what appears to be brain neurons with synapses moving through them, like slow-mo lightning.

The post Live: Jen-Hsun Huang Kicks Off NVIDIA’s 2015 GPU Technology Conference appeared first on The Official NVIDIA Blog.