That’s it, gamers. You’ve been replaced.

Google has used a new technology called deep learning to build a machine that has mastered 50 classic Atari video games. And you’ve never seen Space Invaders played like this.

Talk about the way it’s meant to be played.

Of course, no one is coming for your GeForce GTX 980.

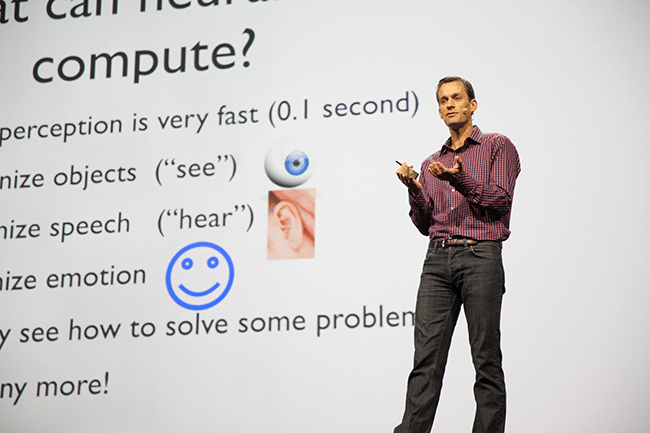

But the same GPU technologies that power your video games are being used by Google to do things few thought would be possible by now, Google Senior Research Fellow Jeff Dean explained Wednesday in a keynote speech at our annual GPU Technology Conference.

Dean is among a core group of engineers at Google who have built a new generation of technologies that have redefined the infrastructure that underpins the Web.

Now, Dean and his colleagues are pushing into new domains — speech, vision, language modeling, user prediction, and translation — that once seemed only possible in the realm of science fiction. Google’s researchers are even using machines to master classic computer games, like Breakout.

Building Digital ‘Brains’

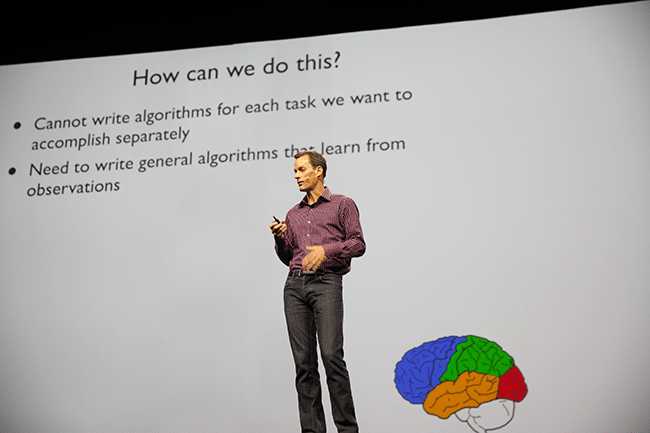

That work is built on creating neural networks modeled on the human brain. But only roughly. Today’s digital brains resemble human ones about as closely as airplane wings resemble the wings of birds.

“We’re not trying to simulate the brain at a very deep chemical transmitter level, we’re taking very high-level abstractions,” Dean said.

Like biological brains, these new digital brains learn to perform complex tasks from scratch. Sophisticated algorithms teach the machine by example, just as a child learns to identify different kinds of balls by being shown many examples.

It may sound simple, but training a computer to learn how to do these tasks saves vast amounts of time. “One of the things we care about is reducing human engineering efforts,” Dean said. “We prefer a deep learning algorithm where the algorithms themselves built up higher levels of abstraction automatically.”

Once trained, these models can be embedded into real-world applications. Since 2012, for example, Google’s Android smartphone software has used deep learning-based predictive speech recognition. The system relies on software built into Android Jelly Bean as well as on Google’s powerful servers. Google is now using deep learning in more than 50 production applications, Dean said.

Google is ideally positioned to push deep learning forward. Its search business gives it access to a vast sea of data, in the form of text and images. And the vast distributed computing infrastructure it has built around this business gives it the ability to crunch data in a hurry.

Now, it’s adding GPUs to this infrastructure, giving it the ability to train neural networks to tackle a wide variety of tasks quickly. The parallel computing capabilities built into GPUs – which are designed to perform vast numbers of tasks at once – allow Google’s engineers to train systems fast.

That lets Google use these systems to do work that wasn’t possible for computers just a few years ago – like identifying house addresses, classifying photos and transcribing speech.

“One of the functions of these models that’s incredibly powerful is they can take input in one modality and transform it to another,” Dean said. “Like take pixels and transform them into text.”

Playing Games

The killer demo, of course, involves video games. Dean described the work of a group of colleagues in London who built a deep learning system, set it loose on 50 classic Atari video games and told it to maximize its score.

While the machine struggled at first, after hundreds of games it showed superhuman capabilities. It tore through alien hordes in Space Invaders and slalomed expertly through the curves on Enduro.
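For readers curious what "told it to maximize its score" can look like in code, here is a minimal sketch. It is not Google's system: it swaps the deep neural network for a plain lookup table (tabular Q-learning) and swaps Atari for a made-up five-square game where the only point is scored by reaching the rightmost square. Every name and number in it (`step`, `train`, `greedy_policy`, the learning rate, the discount) is an assumption chosen for illustration.

```python
import random

N_STATES = 5          # hypothetical toy game: squares 0..4, square 4 ends the game
ACTIONS = (-1, +1)    # action 0 moves left, action 1 moves right


def step(state, action_index):
    """Advance the toy game: 1 point for reaching the last square, else 0."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: learn the value of each action from score alone."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action]
    for _ in range(episodes):
        state = 0
        for _ in range(100):                    # cap the length of one game
            if rng.random() < epsilon:
                action = rng.randrange(2)       # explore: try a random move
            else:
                best = max(q[state])            # exploit: best known move,
                action = rng.choice(            # breaking ties at random
                    [a for a in range(2) if q[state][a] == best]
                )
            next_state, reward, done = step(state, action)
            # Target = points now plus discounted best value of what follows.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best learned action for every non-terminal square."""
    return [max(range(2), key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

Early games in this sketch are essentially random wandering, which mirrors the struggling described above. Once a point is scored, its value spreads backward through the table and the learned policy should settle on moving right from every square. The system Dean described faces a much harder version of the same problem, reading the game screen instead of a square number.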

“I think it’s time to call the ref,” Dean said as he showed a video of Google’s deep learning system pummeling a hapless opponent in video boxing.

The post How Google Uses GPUs to Revolutionize Speech, Video, Image Recognition appeared first on The Official NVIDIA Blog.
